Top 10 Multimodal Models Revolutionizing AI in 2025

Exploring the frontier of AI that processes and integrates text, images, audio, and video

The Multimodal Revolution

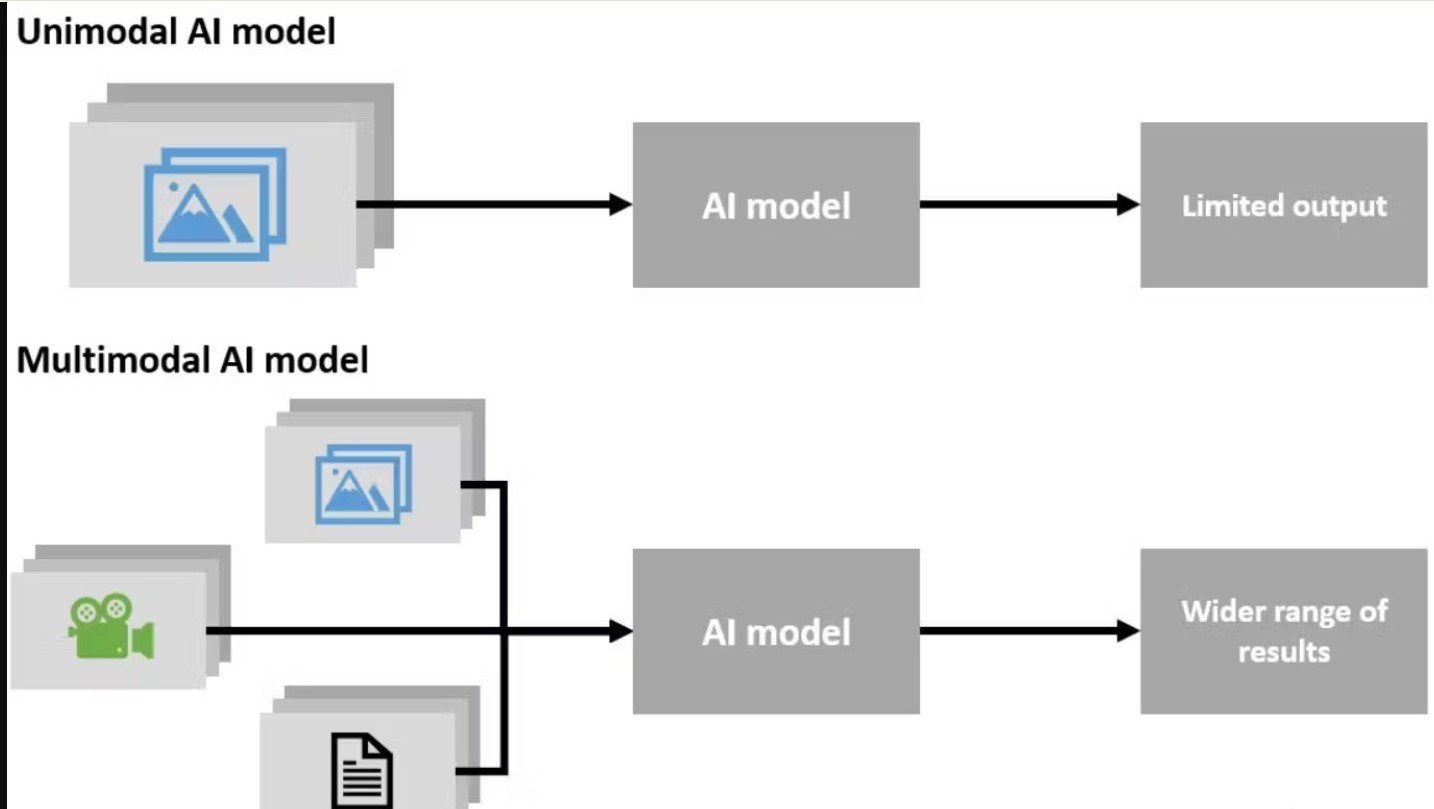

Artificial intelligence (AI) is undergoing a significant shift as its capabilities expand beyond straightforward predictions on tabular data. With greater computing power and state-of-the-art (SOTA) deep learning algorithms, AI is approaching a new era in which large multimodal models dominate the landscape.

Reports suggest the multimodal AI market will grow by 35% annually to USD 4.5 billion by 2028 as the demand for analyzing extensive unstructured data increases. These models can comprehend multiple data modalities simultaneously and generate more accurate predictions than their traditional counterparts.

In this article, we will discuss what multimodal AI models are, how they work, the top models in 2025, current challenges, and future trends.

Multimodal AI Market Growth

How Multimodal AI Models Work

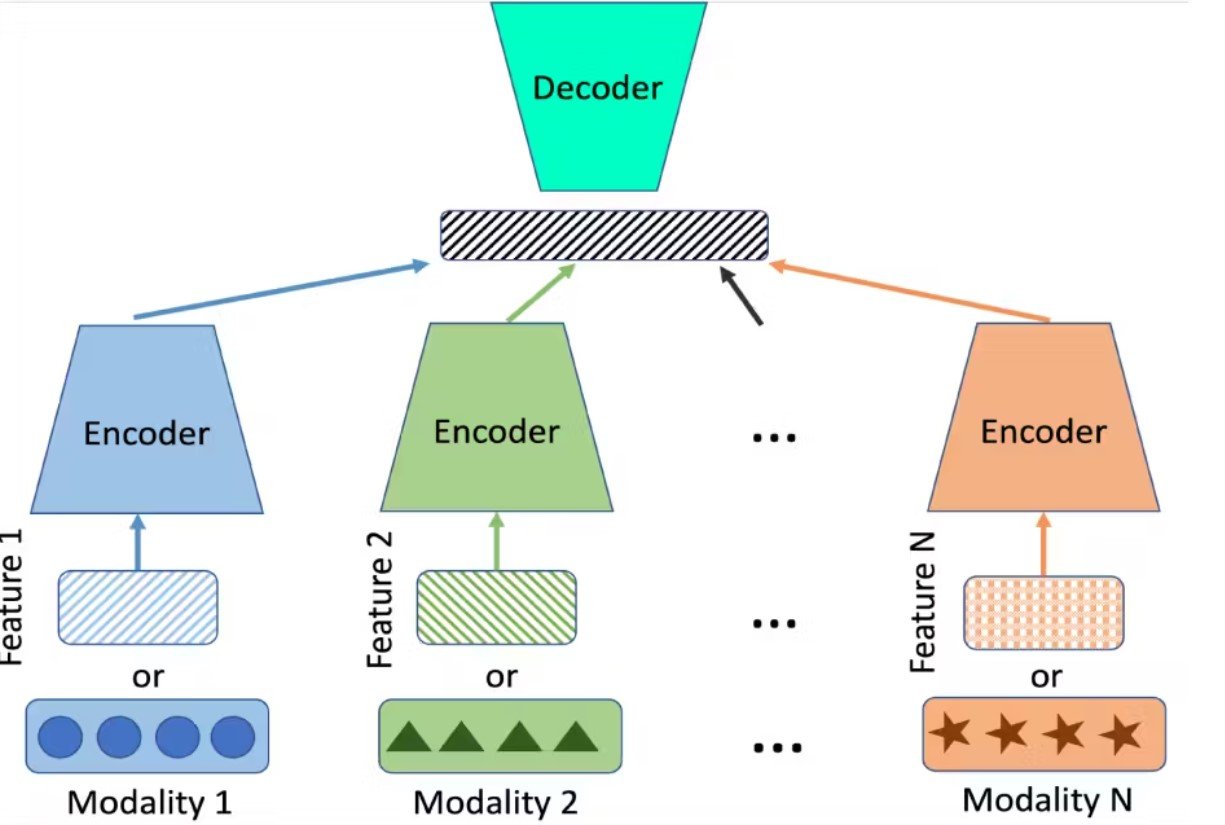

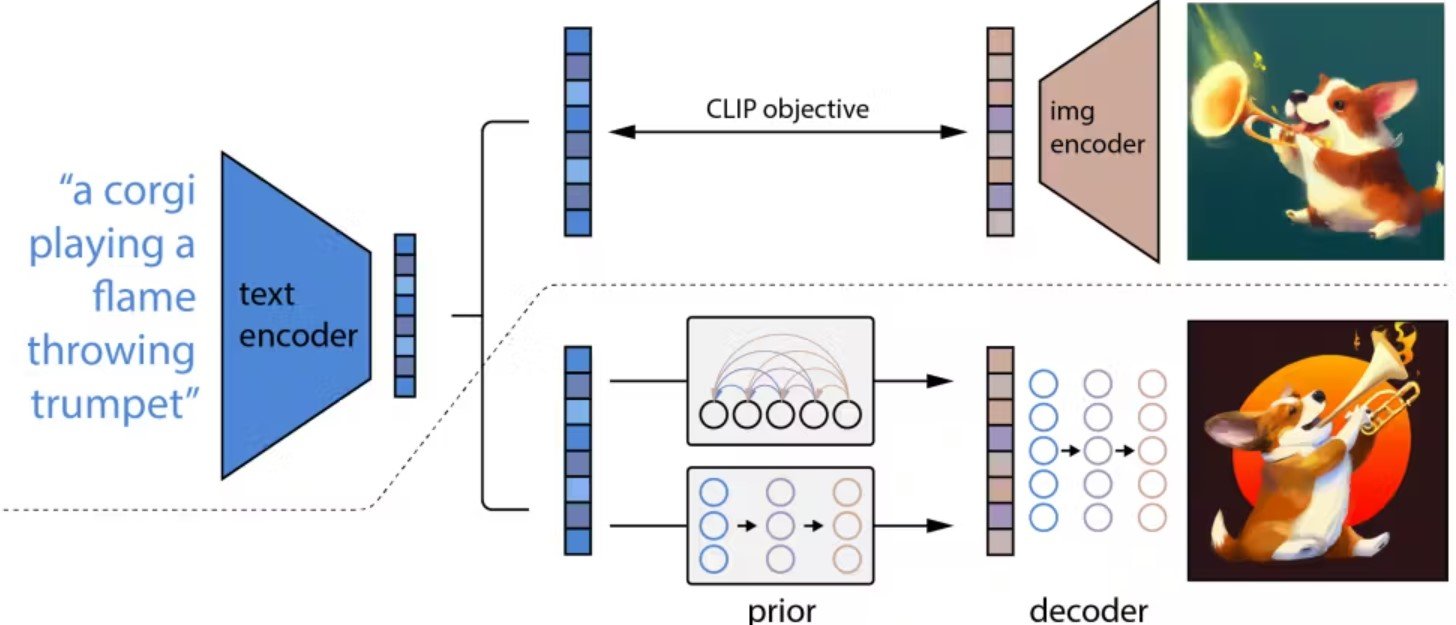

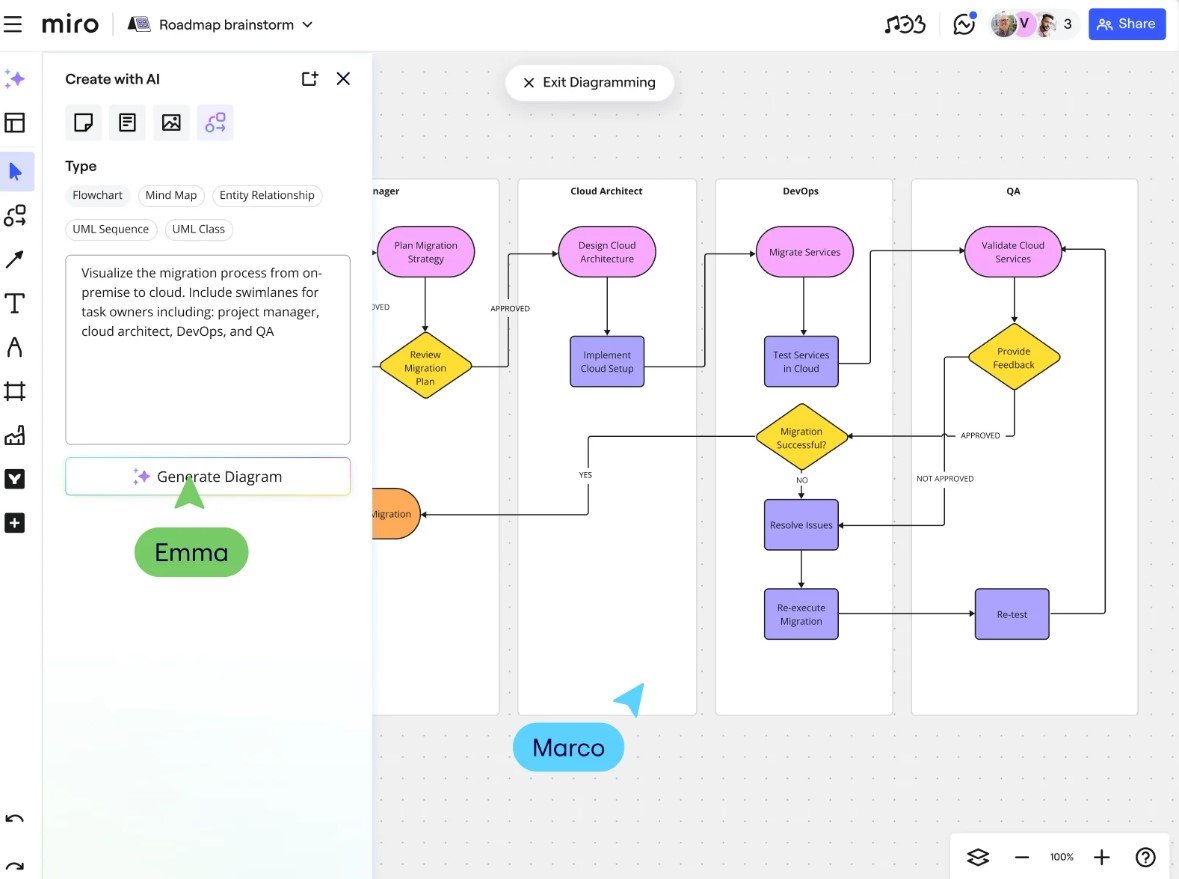

Although multimodal AI models have varied architectures, most frameworks have a few standard components. A typical architecture includes an encoder, a fusion mechanism, and a decoder.

Encoders

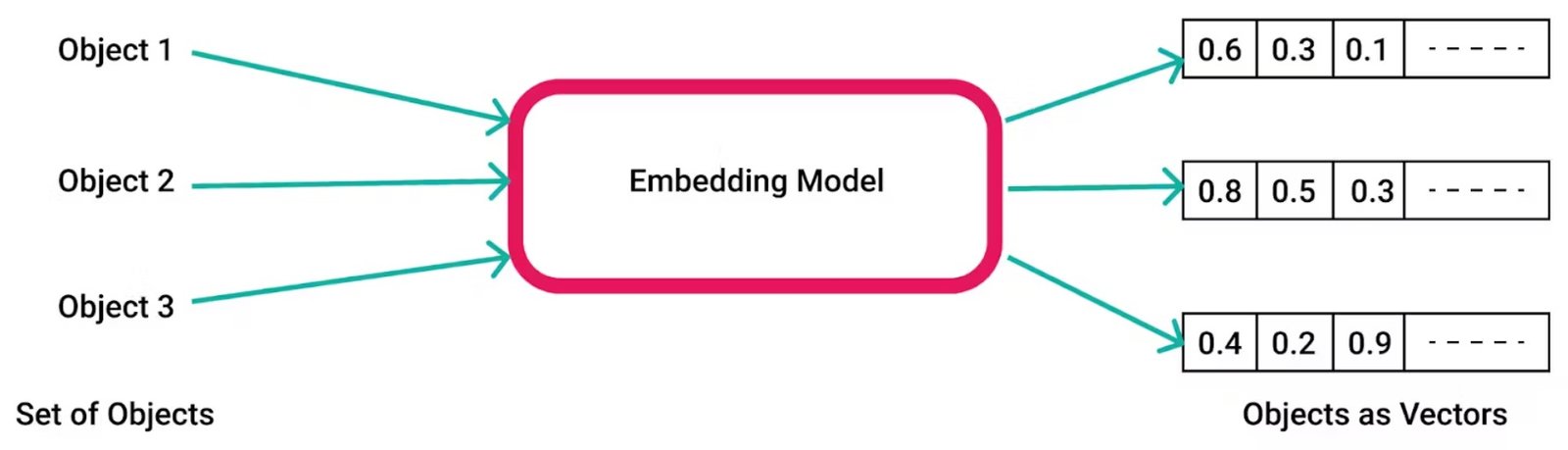

Multimodal AI models often have a separate encoder for each data type: image, text, and audio.

- Image Encoders: Convolutional neural networks (CNNs) and Vision Transformers (ViTs) are popular choices for image encoders. They convert image pixels into feature vectors to help the model understand critical image properties.

- Text Encoders: Text encoders transform text descriptions into embeddings that models can use for further processing. They often use transformer models like those in Generative Pre-Trained Transformer (GPT) frameworks.

- Audio Encoders: Audio encoders convert raw audio files into usable feature vectors that capture critical audio patterns, including rhythm, tone, and context. Wav2Vec2 and Whisper are popular choices for learning audio representations.

Fusion Mechanism Strategies

Once the encoders transform multiple modalities into embeddings, the next step is to combine them so the model can understand the broader context reflected in all data types.

Early Fusion

Combines all modalities before passing them to the model for processing.

Intermediate Fusion

Projects each modality onto a latent space and fuses the latent representations for further processing.

Late Fusion

Processes each modality separately with its own model and fuses the per-modality outputs.

Hybrid Fusion

Combines early, intermediate, and late fusion strategies at different model processing phases.
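
As a rough illustration of where fusion happens in the early, intermediate, and late strategies, the following Python sketch uses toy stand-ins. The encoders (`encode_image`, `encode_text`), the `linear_head` model, and all weights are hypothetical and not taken from any real system. Late fusion is shown as one model per modality with the outputs averaged, which is one common reading of the strategy.

```python
from typing import List

Vector = List[float]


def encode_image(pixels: Vector) -> Vector:
    """Hypothetical image encoder: summarises pixels as (mean, max)."""
    return [sum(pixels) / len(pixels), max(pixels)]


def encode_text(token_ids: Vector) -> Vector:
    """Hypothetical text encoder: summarises token ids as (mean, length)."""
    return [sum(token_ids) / len(token_ids), float(len(token_ids))]


def linear_head(features: Vector, weights: Vector) -> float:
    """Toy prediction model: a weighted sum of the input features."""
    return sum(f * w for f, w in zip(features, weights))


def early_fusion(pixels: Vector, token_ids: Vector, weights: Vector) -> float:
    # Fuse the inputs first, then run a single model on the joint input.
    joint_input = pixels + token_ids
    return linear_head(joint_input, weights)


def intermediate_fusion(pixels: Vector, token_ids: Vector, weights: Vector) -> float:
    # Encode each modality into a latent vector, then fuse the latents.
    latent = encode_image(pixels) + encode_text(token_ids)
    return linear_head(latent, weights)


def late_fusion(
    pixels: Vector, token_ids: Vector, image_weights: Vector, text_weights: Vector
) -> float:
    # Run one model per modality and fuse only the final outputs (here: average).
    image_score = linear_head(encode_image(pixels), image_weights)
    text_score = linear_head(encode_text(token_ids), text_weights)
    return (image_score + text_score) / 2
```

A hybrid strategy would mix these, for example feeding both the joint latent and the per-modality scores into a final model.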

Fusion Mechanism Methods

- Attention-based Methods: Use transformer architecture to convert embeddings from multiple modalities into a query-key-value structure. Cross-modal attention frameworks fuse different modalities according to the inter-relationships between each data type.

- Concatenation: A straightforward fusion technique that merges multiple embeddings into a single feature representation.

- Dot-Product: Multiplies feature vectors from different modalities element-wise to capture interactions and correlations; summing the element-wise products gives the dot product, a single similarity score.
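
The three fusion methods above can be sketched in plain Python. This is a minimal sketch under simplifying assumptions: embeddings are short lists of floats, the function names are illustrative, and the attention function omits the learned query, key, and value projections that a real transformer layer would apply.

```python
import math
from typing import List

Vector = List[float]


def concatenate(a: Vector, b: Vector) -> Vector:
    """Concatenation: join two embeddings into one longer feature vector."""
    return a + b


def elementwise_product(a: Vector, b: Vector) -> Vector:
    """Multiply matching dimensions; both embeddings must have the same size."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same dimension")
    return [x * y for x, y in zip(a, b)]


def softmax(scores: Vector) -> Vector:
    """Numerically stable softmax over a list of scores."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(query: Vector, keys: List[Vector], values: List[Vector]) -> Vector:
    """Single-query scaled dot-product attention.

    The query comes from one modality (e.g. a text token) and the keys and
    values from another (e.g. image patches).
    """
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    return [
        sum(w * value[i] for w, value in zip(weights, values))
        for i in range(len(values[0]))
    ]
```

Concatenation keeps every feature but lets the downstream model learn the interactions; the element-wise product and attention encode interactions directly, at the cost of requiring compatible dimensions.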

Decoders

The last component is a decoder network that processes the feature vectors from different modalities to produce the required output. Decoders can contain cross-modal attention networks to focus on different parts of input data and produce relevant outputs. Recurrent neural networks (RNNs), convolutional neural networks (CNNs), and generative adversarial networks (GANs) are popular choices for constructing decoders.

Multimodal Models – Use Cases

With recent advancements in multimodal AI models, AI systems can perform complex tasks involving the simultaneous integration and interpretation of multiple modalities.

Top Multimodal AI Models in 2025

GPT-5o

- Key Features: Real-time processing of audio, video, text, and image; 280ms average response time; supports over 100 languages

- Performance: Achieves 15% improvement over GPT-4o on text, reasoning, and coding benchmarks

- Use Cases: Generate nuanced content with tone, rhythm, and emotion; real-time translation; interactive assistants

Gemini 2.0

- Key Features: Up to 5 million token context window; processes audio, video, text, and image data; three variants (Ultra, Pro, Nano)

- Performance: State-of-the-art performance on 20 cross-modal benchmarks

- Use Cases: Complex code generation; educational tools; on-device virtual assistants

Claude 4

- Key Features: Three variants (Haiku, Sonnet, Opus); processes over 2 million tokens; understands photos, charts, graphs

- Performance: SOTA performance on reasoning benchmarks; processes research papers in under 2 seconds

- Use Cases: Educational tool for complex diagrams; technical documentation analysis; data interpretation

LLaVA-2

- Key Features: Combines Vicuna-2 and CLIP; 95.2% accuracy on Science QA dataset; open-source

- Performance: SOTA performance in chat-related tasks with visual content

- Use Cases: Advanced chatbots for e-commerce; visual question answering; image-based search

CogVLM-2

- Key Features: Attention-based fusion; uses EVA2-CLIP-E visual encoder; open-source

- Performance: SOTA on 20 cross-modal benchmarks including image captioning and VQA

- Use Cases: Visual question answering; detailed image descriptions; visual grounding tasks

- Key Features: Combines eight modalities (text, video, audio, depth, thermal, IMU, tactile, olfactory); uses InfoNCE loss

- Performance: Can use any modality as input to generate output in any modality

- Use Cases: Generate promotional videos with desired audio; cross-modal retrieval; immersive experiences
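
The InfoNCE loss mentioned in the entry above is a contrastive objective that pulls matching embeddings from two modalities together and pushes non-matching ones apart. The sketch below is a generic, one-directional version and is not the training code of any particular model. The function names and the `temperature` default are assumptions, and the embeddings are assumed to be non-zero vectors.

```python
import math
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def l2_normalize(v: Vector) -> Vector:
    """Scale a non-zero vector to unit length."""
    norm = math.sqrt(dot(v, v))
    return [x / norm for x in v]


def info_nce(anchors: List[Vector], positives: List[Vector], temperature: float = 0.07) -> float:
    """Average InfoNCE loss over a batch.

    positives[i] is the matching item for anchors[i]; every other entry of
    positives acts as a negative for that anchor.
    """
    anchors = [l2_normalize(a) for a in anchors]
    positives = [l2_normalize(p) for p in positives]
    total = 0.0
    for i, anchor in enumerate(anchors):
        logits = [dot(anchor, p) / temperature for p in positives]
        top = max(logits)
        # log-sum-exp computed stably: log of the softmax denominator.
        log_denominator = top + math.log(sum(math.exp(l - top) for l in logits))
        total += log_denominator - logits[i]
    return total / len(anchors)
```

The loss is close to zero when each anchor is most similar to its own positive and grows when the pairs are mismatched. Symmetric variants average this loss with the same loss computed in the other direction.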

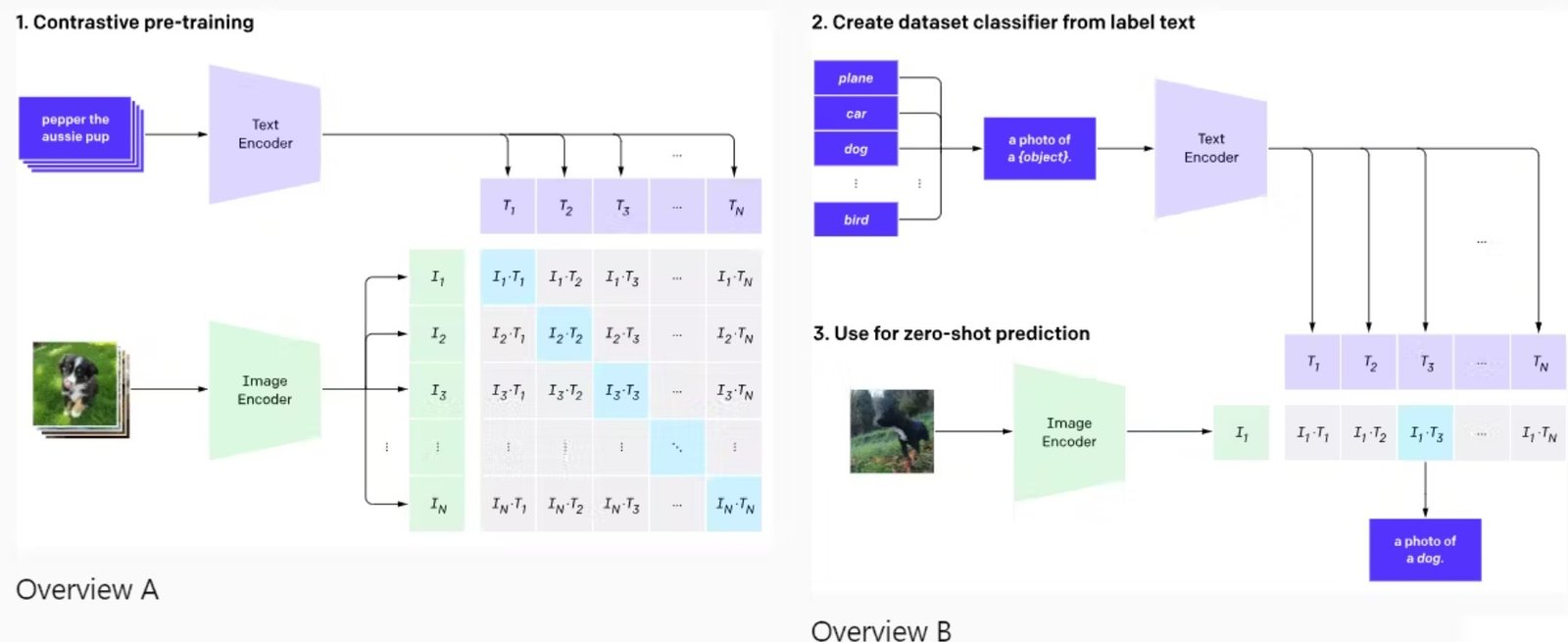

- Key Features: Enhanced contrastive framework; uses transformer-based text encoder and Vision Transformer; zero-shot capability

- Performance: 20% improvement in generalization to new data without task-specific fine-tuning

- Use Cases: Image annotation; image retrieval for AI-based search systems; textual descriptions from images
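
Zero-shot capability in a contrastive image-text model can be pictured as nearest-neighbour search in the shared embedding space: the image embedding is compared with the embedding of each candidate label prompt, and the closest prompt wins. The sketch below assumes the embeddings have already been produced by the model's encoders; the vectors and the `zero_shot_classify` helper are hypothetical.

```python
import math
from typing import Dict, List

Vector = List[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def zero_shot_classify(image_embedding: Vector, label_embeddings: Dict[str, Vector]) -> str:
    """Return the label prompt whose text embedding is closest to the image embedding."""
    return max(
        label_embeddings,
        key=lambda label: cosine_similarity(image_embedding, label_embeddings[label]),
    )


# Toy embeddings standing in for real encoder outputs.
labels = {
    "a photo of a cat": [1.0, 0.0],
    "a photo of a dog": [0.0, 1.0],
}
prediction = zero_shot_classify([0.9, 0.1], labels)
```

No task-specific fine-tuning is involved: adding a new class only requires embedding one more text prompt.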

- Key Features: CLIP-2 based architecture; enhanced diffusion decoder; 18 billion parameters

- Performance: Can process up to 2560 tokens; generates photorealistic images from text prompts

- Use Cases: Generate abstract images; transform existing images; product visualization

- Key Features: Text-to-video and image-to-video generation; uses diffusion-based models; cross-attention mechanism

- Performance: Creates 4K realistic videos from textual and visual prompts

- Use Cases: Content creation; stylized video generation; video editing assistance

- Key Features: Processes videos, images, and text; few-shot learning; uses Perceiver Resampler

- Performance: 30% reduction in computational complexity for images and videos with extensive features

- Use Cases: Image captioning; classification; visual question answering
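
The idea behind a Perceiver Resampler, as named in the entry above, is that a small, fixed set of latent query vectors attends over however many visual features the image or video produces, so later layers always see the same number of visual tokens. The following is a heavily simplified single-layer sketch: the latent queries would be learned parameters in a real model, and the projections, multiple heads, and feed-forward layers are omitted. The `attend` and `resample` names are illustrative.

```python
import math
from typing import List

Vector = List[float]


def attend(query: Vector, keys: List[Vector], values: List[Vector]) -> Vector:
    """Scaled dot-product attention for a single query vector."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [
        sum((e / total) * value[i] for e, value in zip(exps, values))
        for i in range(len(values[0]))
    ]


def resample(visual_features: List[Vector], latent_queries: List[Vector]) -> List[Vector]:
    """Map any number of visual features to len(latent_queries) output tokens."""
    return [attend(query, visual_features, visual_features) for query in latent_queries]
```

Whether the input is 5 feature vectors or 50, `resample` returns one token per latent query, which is why the cost of the downstream layers no longer grows with the size of the visual input.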

Model Performance Comparison

Challenges and Future Trends

Current Challenges

- Data Availability: Aligning datasets across modalities is complex and results in noise during multimodal AI learning. Mitigation strategies include using pre-trained foundation models, data augmentation, and few-shot learning.

- Data Annotation: Annotating multimodal AI data requires extensive expertise and resources. Solutions include third-party annotation tools and automated labeling algorithms.

- Model Complexity: Training multimodal models is computationally expensive and prone to overfitting. Strategies like knowledge distillation, quantization, and small language models can help.

- Ethical Concerns: Bias in multimodal systems can perpetuate stereotypes. Ensuring fairness and transparency remains a significant challenge.
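
To make the quantization strategy listed under Model Complexity concrete, here is a minimal sketch of symmetric 8-bit quantization of a weight list. It assumes a single scale per tensor and integers in [-127, 127]; production toolkits use more elaborate schemes (per-channel scales, calibration data), and the function names are illustrative.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization of floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    # Fall back to a scale of 1.0 when every weight is zero.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the stored integers."""
    return [q * scale for q in quantized]
```

Each weight is stored in one byte instead of four, and the reconstruction error per weight is at most about half of `scale`.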

Future Trends

- Advanced Fusion Techniques: New architectures that better integrate modalities at multiple levels of abstraction.

- Efficiency Improvements: Techniques to reduce computational requirements while maintaining performance.

- Explainable AI (XAI): Methods to understand model decisions, especially in cross-modal contexts.

- Specialized Hardware: AI chips optimized for multimodal processing to handle diverse data types efficiently.

- Regulation and Standards: Development of industry standards and regulatory frameworks for multimodal AI systems.

Multimodal Models: Key Takeaways

- Multimodal models are revolutionizing human-AI interaction by enabling deployment in complex environments that require an advanced understanding of real-world data.

- A typical architecture includes encoders to map raw data into feature vectors, a fusion strategy to consolidate modalities, and a decoder to generate relevant output.

- Popular fusion methods include attention-based techniques, concatenation, and dot-product approaches.

- Key use cases include visual question-answering, cross-modal search, generative AI, and image segmentation.

- Top models in 2025 include GPT-5o, Gemini 2.0, Claude 4, LLaVA-2, and CogVLM-2, each with unique capabilities and applications.

- Challenges include data availability, annotation complexity, model efficiency, and ethical considerations.

- Future trends point to more efficient architectures, better explainability, specialized hardware, and regulatory frameworks.